As artificial intelligence (AI) continues to permeate digital experiences, product teams are increasingly turning to A/B testing to evaluate AI-driven features. Traditional metrics like click-through rates (CTR) and conversions have long been used to assess performance. However, when it comes to AI systems—especially those that generate content or make context-sensitive decisions—these measures often fall short. The challenge lies in measuring *quality*, an inherently subjective and context-dependent attribute that’s not always reflected in a simple click.

In the age of generative AI, tools like chat-based assistants, AI recommendation engines, and image generators provide outputs that can vary widely in relevance, tone, depth, and value. It’s no longer sufficient to evaluate success solely through direct interaction or surface-level engagement. To truly capture the impact of these technologies, organizations must evolve how they test and interpret AI performance.

Why Traditional A/B Testing Falls Short

At its core, a traditional A/B test is designed to measure user behavior in response to two or more versions of a product or feature variation. This method works well for UI changes, button placements, or pricing strategies because success is often binary—a user either completes the desired action or not.

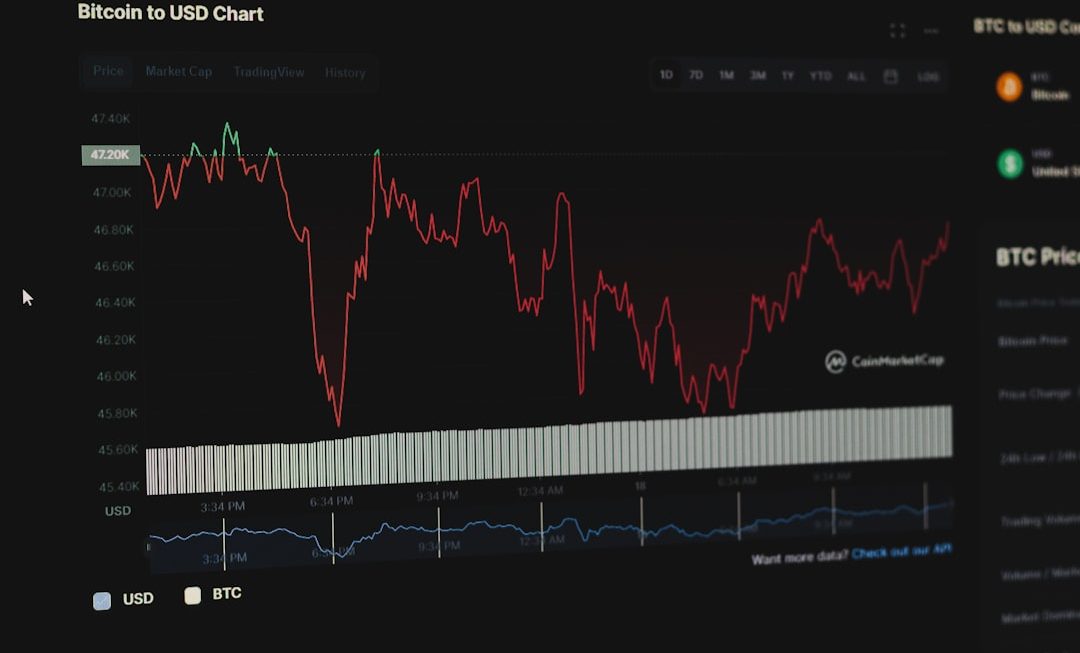

AI, however, introduces ambiguity. A user might click on an AI-generated summary because it’s shorter, but later find it unhelpful. Alternatively, a user may not click at all but still gain valuable information from a visible snippet. In such cases, CTR might paint a misleading picture.

Reframing AI A/B Testing with Qualitative Metrics

To properly assess AI output, teams must expand their metrics beyond the obvious choices. Here are a few strategies that help in testing for *quality* rather than just *engagement*:

- User Satisfaction Scoring: Ask users to rate AI outputs directly. A post-interaction thumbs-up/down or a Likert scale (1–5) rating system can provide valuable feedback straight from the source.

- Human Evaluation: Internal review panels or outsourced human evaluators can assess AI results based on clarity, usefulness, and relevance. This introduces a grounded, contextual understanding of quality that’s difficult to capture via analytics alone.

- Time-on-Task Analysis: For interfaces like AI writing assistants or chatbots, the time a user spends reading or editing text can indicate whether the AI’s suggestions were genuinely helpful or merely accepted to keep the workflow moving.

- Task Completion Rate: Measure whether users successfully accomplish their goals using the AI feature. Did the AI help them find the information, complete the design, or generate the report they needed?

Taken together, these metrics shift the focus from merely *what users clicked* to *how they felt and what they achieved*. Quality now becomes multidimensional—touching on satisfaction, utility, efficiency, and more.
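As one illustration of how a metric like task completion rate could be compared between two variants, here is a minimal sketch of a two-proportion z-test using only the Python standard library. The function name and the counts are hypothetical, and the test assumes independent users and samples large enough for the normal approximation to hold.

```python
import math

def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Two-sided z-test for a difference in task completion rates."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    # Pooled rate under the null hypothesis that both variants perform equally.
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal CDF (via the error function).
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return p_b - p_a, z, p_value

# Hypothetical counts: users who completed their task, out of users exposed.
diff, z, p_value = two_proportion_z_test(
    success_a=420, n_a=1000, success_b=465, n_b=1000
)
print(f"lift={diff:.3f} z={z:.2f} p={p_value:.4f}")
```

The same structure applies to thumbs-up rates. Likert ratings are ordinal, so a rank-based test is usually a better fit for them than a test on proportions.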

Personalization and Context-Aware Testing

AI systems often tailor responses based on user context, history, or prompts. This makes universal testing harder. A response that satisfies one user may entirely miss the mark for another. Therefore, context-aware segmentation becomes crucial in AI A/B testing.

Product managers and data scientists should define personas or interaction types and analyze results within these buckets. For example:

- First-time users vs. power users

- Short-form queries vs. detailed prompts

- Creative requests vs. factual questions

Moreover, outcome-based evaluation across segments can help identify where one AI model excels while another fails, allowing for more nuanced deployment strategies.
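A segmented comparison like the one described above might be sketched as follows. The segment names, variant labels, and ratings are made-up illustration data, not results from a real test. In this toy data the two variants have the same overall mean rating, yet each one wins clearly in a different segment, which is exactly the pattern a pooled analysis would hide.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical log rows: (segment, variant, satisfaction rating on a 1-5 scale).
ratings = [
    ("first_time", "A", 4), ("first_time", "A", 5),
    ("first_time", "B", 3), ("first_time", "B", 2),
    ("power_user", "A", 3), ("power_user", "A", 2),
    ("power_user", "B", 5), ("power_user", "B", 4),
]

def mean_rating_by_segment(rows):
    """Return {segment: {variant: mean rating}}."""
    buckets = defaultdict(lambda: defaultdict(list))
    for segment, variant, rating in rows:
        buckets[segment][variant].append(rating)
    return {
        segment: {variant: mean(values) for variant, values in variants.items()}
        for segment, variants in buckets.items()
    }

summary = mean_rating_by_segment(ratings)
for segment, variants in summary.items():
    print(segment, variants)
```

In practice each segment needs enough traffic to support its own comparison, so it helps to define the segments before the test starts rather than searching for them afterwards.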

Leveraging Implicit Signals Over Explicit Feedback

Since asking users for feedback at every interaction adds friction, teams can also use *implicit signals* to assess quality. These include:

- Scroll Depth: Did the user scroll past the AI-generated content quickly, or spend time interacting?

- Copy/Paste Tracking: Was the output content copied, reused, or quoted elsewhere?

- Editing Behavior: In AI-generated writing environments, the extent and nature of user edits can reflect how well the content met user expectations.

By combining these behavioral signals with session analytics, teams get a richer picture of utility without overwhelming users with constant feedback requests.
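Editing behavior is one of the easier implicit signals to quantify. The sketch below uses `difflib.SequenceMatcher` from the Python standard library to score how much of an AI draft survived into the text the user finally kept. The function name `edit_similarity` and the sample strings are illustrative assumptions, and word-level similarity is only a rough proxy: a single changed word can matter more than a rewritten paragraph.

```python
from difflib import SequenceMatcher

def edit_similarity(ai_text: str, final_text: str) -> float:
    """Word-level similarity in [0, 1] between the AI draft and the kept text.

    1.0 means the user kept the draft unchanged; values near 0 mean the
    draft was almost entirely rewritten.
    """
    ai_words = ai_text.split()
    final_words = final_text.split()
    return SequenceMatcher(None, ai_words, final_words).ratio()

# Hypothetical draft and user-edited version.
draft = "The quarterly report shows strong growth in sales"
kept = "The quarterly report shows modest growth in sales"
print(edit_similarity(draft, kept))
```

Averaging this score per variant gives a friction-free comparison, though it is best read alongside explicit ratings, since heavy editing can also mean the user was engaged enough to build on the draft.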

Challenges in Defining Quality

Perhaps the most uncertain aspect of AI A/B testing lies in defining the benchmark for a “high-quality” output. Unlike static UI design, where a crisp button corner or vibrant color might yield universal appeal, AI content varies in accuracy, tone, fluency, and helpfulness depending on the context.

Thus, many companies adopt an iterative testing model:

- Deploy an AI variation to a small user subset.

- Collect both structured (ratings) and unstructured (user comments) feedback.

- Hold weekly or bi-weekly reviews involving cross-functional teams (e.g., content strategists, UX designers, engineers, linguists).

- Quantify the patterns and refine the model accordingly.
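For the first step in the list above, one common way to expose a small, stable subset of users is deterministic hash-based bucketing. This is a minimal sketch under stated assumptions: the function name `in_rollout`, the experiment name, and the user ID format are all hypothetical, and a real system would also need to log assignments.

```python
import hashlib

def in_rollout(user_id: str, experiment: str, exposure: float) -> bool:
    """Deterministically place roughly `exposure` of users in the test group."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return bucket < exposure

# Hypothetical experiment name and user IDs, with a 5% exposure target.
exposed = sum(
    in_rollout(f"user-{i}", "summary-model-v2", 0.05) for i in range(10_000)
)
print(f"{exposed} of 10000 users exposed")
```

Because the assignment depends only on the user ID and the experiment name, a user sees the same variant on every visit, and including the experiment name in the hash keeps assignments independent across experiments.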

AI A/B Testing in Practice: Tools and Infrastructure

Modern testing platforms like Optimizely, as well as in-house systems, now support AI-aware metrics (Google Optimize, formerly influential, was discontinued in 2023). Data pipelines are increasingly equipped to process not just click data but also action sequences, semantic similarity scores, and user engagement paths.

Moreover, open-source libraries and cloud platforms make it feasible to set up human-in-the-loop systems. For example:

- Label Studio for annotation and qualitative review

- Weights & Biases for model monitoring and experimentation tracking

- OpenAI’s Evals framework for systematic evaluation of model outputs

Testing AI isn’t just about statistics; it’s about infrastructure, human judgment, and aligning AI behavior with real-world expectations.

The Future of A/B Testing in an AI World

As AI continues to evolve, so must the testing methodologies associated with it. The ideal AI A/B test of the future may involve:

- Dynamic test cohorts that adapt based on user behavior

- In-model feedback loops where user corrections train the system in real time

- Ethical layers to evaluate potential biases, misinformation, and fairness

Ultimately, measuring the success of AI isn’t just a matter of testing “A vs. B.” It’s about continuously redefining what *good* looks like in a world where machine-generated content shapes experiences, decisions, and knowledge.

FAQ: AI A/B Tests – Measuring Quality Beyond Clicks

Q: Why don’t click-through rates (CTR) always reflect AI quality?

A: CTR measures engagement but not satisfaction or usefulness. Users might click something because it looks interesting, not because it’s helpful or correct.

Q: What are some alternative metrics for AI A/B testing?

A: Useful alternatives include user satisfaction scores, human evaluations, task completion rate, and behavioral signals like scroll depth and copy/paste events.

Q: Is human evaluation scalable for large A/B tests?

A: It’s challenging, but possible with representative sampling and annotation tools like Label Studio. Initial automated filtering followed by human review also helps.

Q: Can AI A/B tests be personalized?

A: Yes, and they should be. Context-aware segmentation ensures meaningful comparisons by grouping users based on behavior and goals.

Q: How often should AI variations be tested and refined?

A: Regularly. Weekly or bi-weekly review cycles work well, and frequent iteration helps keep the AI aligned with evolving user expectations.