As the volume of online content grows, users expect quick, accurate, and contextual search, especially on content hubs. Traditional keyword-based search often falls short of those expectations. Live search is an interactive format that returns results while the user is still typing. At its core is query understanding, a process that is both essential and complex.

What Is Query Understanding?

Query understanding refers to the process by which a search engine or application interprets the user’s input — often incomplete or imprecise — to determine their actual intent and deliver the most relevant results. This involves dissecting and analyzing the user’s query through various natural language processing (NLP) techniques, AI models, and contextual algorithms.

On content hubs, especially those hosting diverse content such as articles, videos, FAQs, and whitepapers, query understanding is crucial because the search system has to work across different content types and taxonomies to find the most meaningful match.

How Query Understanding Powers Live Search

Unlike conventional search, which processes the full input only after the user clicks “search,” live search operates in real time, updating results as the user types. The backend must therefore parse and interpret partial input on each keystroke, which adds a layer of complexity.
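
To make the per-keystroke lookup concrete, here is a minimal sketch of prefix matching over a sorted in-memory index. The `TITLES` list and the `prefix_matches` function are illustrative assumptions, not part of any particular search product. A production system would typically also debounce keystrokes and query a dedicated search backend.

```python
from bisect import bisect_left

# Hypothetical title index; a real hub would build this from its content store.
TITLES = sorted(
    title.lower()
    for title in [
        "Remote Work Best Practices",
        "Remote Team Onboarding",
        "Reading List for Managers",
        "Search Relevance Basics",
    ]
)


def prefix_matches(prefix: str, limit: int = 5) -> list[str]:
    """Return up to `limit` indexed titles that start with `prefix`."""
    prefix = prefix.strip().lower()
    if not prefix:
        return []
    # Binary search finds the first title that could share the prefix.
    start = bisect_left(TITLES, prefix)
    results = []
    for title in TITLES[start:]:
        if not title.startswith(prefix):
            break  # sorted order: no later title can match
        results.append(title)
        if len(results) == limit:
            break
    return results


# Simulate the lookups triggered as a user types "remo".
for typed in ["r", "re", "rem", "remo"]:
    print(typed, "->", prefix_matches(typed))
```

Because the list is sorted, each keystroke costs one binary search plus a short scan, which keeps the lookup cheap enough to repeat on every input change.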

To manage this, live search depends heavily on query understanding methodologies that include:

- Intent Detection: Determining what the user wants to achieve — whether it’s finding a specific article, researching a topic, or watching a video.

- Entity Recognition: Identifying named entities such as places, people, or specific product names within the search term.

- Context Incorporation: Leveraging information about user behavior, previous searches, or session analytics to provide personalized results.
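
The three methods above can be sketched with simple rules. This is a toy illustration only: the cue words, the entity list, and the function names are assumptions made for the example. Real systems usually rely on trained NLP models rather than hand-written lookups.

```python
import re

# Hypothetical cue words and entity list, for illustration only.
INTENT_CUES = {
    "watch_video": {"video", "watch", "webinar"},
    "find_article": {"article", "guide", "whitepaper"},
}
KNOWN_ENTITIES = {
    "london": "place",
    "ada lovelace": "person",
    "acme router": "product",
}


def detect_intent(query: str) -> str:
    """Pick the first intent whose cue words appear in the query."""
    tokens = set(re.findall(r"[a-z0-9]+", query.lower()))
    for intent, cues in INTENT_CUES.items():
        if tokens & cues:
            return intent
    return "research_topic"  # fallback when no cue word is present


def find_entities(query: str) -> list[tuple[str, str]]:
    """Return (entity, type) pairs for known entities found as whole words."""
    text = query.lower()
    return [
        (name, kind)
        for name, kind in KNOWN_ENTITIES.items()
        if re.search(rf"\b{re.escape(name)}\b", text)
    ]


def understand(query: str, recent_searches: list[str]) -> dict:
    """Combine intent, entities, and a small slice of session context."""
    return {
        "intent": detect_intent(query),
        "entities": find_entities(query),
        "context_terms": recent_searches[-3:],  # last three searches only
    }


print(understand("watch acme router setup video", ["vpn setup", "router reset"]))
```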

Why Live Search Matters on Content Hubs

For large content repositories, delivering relevant, fresh, and valuable content is a top priority. Live search enhances user experience by providing:

- Instantaneous feedback: Speeds up discovery, increasing user satisfaction and engagement.

- Improved discoverability: Surfaces hidden or older content users may not have found otherwise.

- Reduced bounce rates: Users are more likely to stay if they quickly find what they need.

Content hubs like educational websites, corporate knowledge bases, and multimedia archives benefit significantly from live search as it encourages exploratory behavior — sparking curiosity and keeping users engaged.

Challenges in Query Understanding

Despite its promise, implementing high-quality query understanding in live search comes with notable challenges, including:

- Ambiguity: Short queries can be vague and hard to interpret accurately. For example, does “Apple” refer to the fruit or the tech company?

- Spelling Variations or Typos: Users often mistype or use different spelling standards (e.g., American vs British English).

- Sparsity of Data: New or niche queries might not have enough data for the algorithm to make informed guesses.

- Multiple Languages: Content hubs serving global audiences need multilingual NLP support, adding another layer of complexity.
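
As one way to handle the spelling challenge, the sketch below normalizes a query term using a small variant map and fuzzy matching from Python's standard `difflib` module. The vocabulary, the variant map, and the 0.8 cutoff are assumptions chosen for illustration. A real hub would derive its vocabulary from the indexed content and tune the cutoff against real queries.

```python
from difflib import get_close_matches

# Hypothetical vocabulary and spelling-variant map, for illustration only.
VOCABULARY = ["apple", "color", "organization", "tutorial", "whitepaper"]
SPELLING_VARIANTS = {"colour": "color", "organisation": "organization"}


def normalize_term(term: str, cutoff: float = 0.8) -> str:
    """Map a typed term to a vocabulary term, or return it unchanged."""
    term = term.lower()
    # Known regional variants are resolved first.
    term = SPELLING_VARIANTS.get(term, term)
    if term in VOCABULARY:
        return term
    # Otherwise fall back to the closest vocabulary entry above the cutoff.
    matches = get_close_matches(term, VOCABULARY, n=1, cutoff=cutoff)
    return matches[0] if matches else term


for typed in ["tutoral", "colour", "zzz"]:
    print(typed, "->", normalize_term(typed))
```

Returning the original term when nothing is close enough avoids silently rewriting rare but valid queries.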

Strategies to Improve Query Understanding

Addressing these challenges requires a multifaceted approach. Commonly used strategies include:

- Autocomplete and Autosuggest: By predicting the rest of the user’s query, this feature reduces ambiguity and helps guide searches toward intent-rich inputs.

- Query Rewriting or Expansion: Techniques like synonym substitution or appending related terms improve the quality of searches, especially for sparse queries.

- Semantic Search and Word Embeddings: Embedding models such as BERT or Word2Vec can map user queries to conceptually similar content, not just keyword matches.

- Intent Classification Models: Trained classifiers, often deep learning models, can categorize search terms under intents like “informational,” “navigational,” or “transactional.”

- Feedback Loops: Systems that learn from user behavior (click-throughs, time spent on results) can self-optimize over time.
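
Of the strategies above, query expansion is the simplest to illustrate. The following is a minimal sketch that assumes a hand-maintained synonym table. `SYNONYMS` and `expand_query` are hypothetical names, and a real system might instead draw synonyms from a thesaurus or from embedding neighbors.

```python
# Hypothetical synonym table, for illustration only.
SYNONYMS = {
    "remote": ["distributed", "work-from-home"],
    "teams": ["groups"],
}


def expand_query(query: str) -> list[str]:
    """Return the original terms followed by any known synonyms."""
    terms = query.lower().split()
    expanded = list(terms)  # original terms stay first
    for term in terms:
        for synonym in SYNONYMS.get(term, []):
            if synonym not in expanded:  # avoid duplicate terms
                expanded.append(synonym)
    return expanded


print(expand_query("remote teams"))
```

Keeping the original terms first lets a ranking step weight them above the added synonyms if desired.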

Using Data to Fine-Tune the Search Experience

Live search engines on content hubs can harness user interaction data to improve their understanding of future queries. This includes:

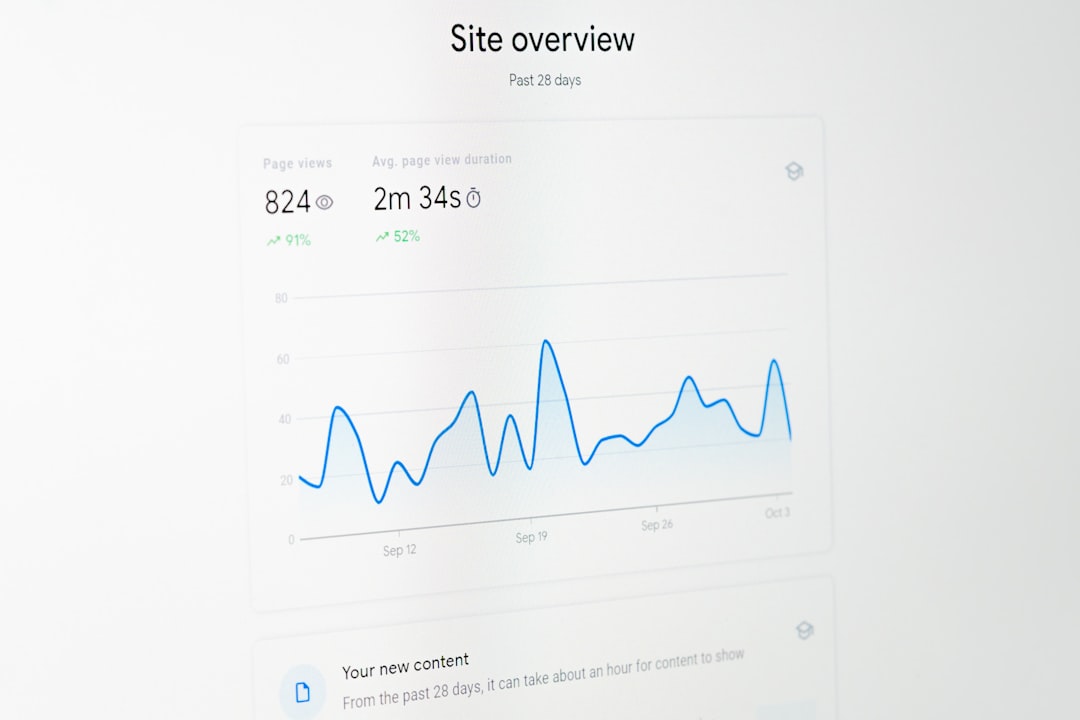

- Clickstream Data: Tracks how users interact with search results to determine which results satisfied specific queries.

- Search Abandonment Metrics: If users don’t click on any search result, it may indicate poor result relevance.

- A/B Testing Different Models: Experimenting with various NLP configurations or ranking algorithms to identify the best-performing setup.
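
As a rough illustration of the abandonment metric, the sketch below computes, per query, the share of searches that ended without a click. The log format of `(query, clicked_result_id)` pairs and the sample records are assumptions made up for the example, not real data.

```python
from collections import defaultdict

# Hypothetical log records: (query, clicked result id or None if no click).
SEARCH_LOG = [
    ("remote teams", "doc-12"),
    ("remote teams", None),
    ("onboarding", "doc-7"),
    ("vpn setup", None),
    ("vpn setup", None),
]


def abandonment_rate_by_query(log):
    """Return the fraction of searches per query that had no click."""
    totals = defaultdict(int)
    abandoned = defaultdict(int)
    for query, clicked in log:
        totals[query] += 1
        if clicked is None:
            abandoned[query] += 1
    return {query: abandoned[query] / totals[query] for query in totals}


for query, rate in abandonment_rate_by_query(SEARCH_LOG).items():
    print(f"{query}: {rate:.0%} abandoned")
```

Queries with a high abandonment rate are natural candidates for synonym updates, content gaps review, or an A/B test of a different ranking setup.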

The Role of Taxonomy and Metadata

A well-organized content structure plays a significant role in allowing query understanding to function correctly. Clear taxonomy and enriched metadata enable faster and more accurate matching of queries to content. Tags, categories, content types, and author information all contribute to better search relevance.

For example, if a user types “best practices for remote teams,” a well-tagged article under category “Remote Work,” written by a known author, and enriched with the right keywords is likely to appear at the top — even if the keywords don’t match word-for-word.
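
One way to picture this is a scoring function that counts query-term overlap in each metadata field and weights the fields differently. The sketch below is an assumption-laden toy: the `Doc` class, the field weights, and the sample documents are invented for illustration, and real engines use more sophisticated ranking functions.

```python
from dataclasses import dataclass, field


@dataclass
class Doc:
    title: str
    category: str
    tags: set[str] = field(default_factory=set)


# Hypothetical weights: how much a term match in each field counts.
FIELD_WEIGHTS = {"title": 3.0, "category": 2.0, "tags": 1.0}


def tokens(text: str) -> set[str]:
    return set(text.lower().split())


def score(query: str, doc: Doc) -> float:
    """Weighted count of query terms found in title, category, and tags."""
    q = tokens(query)
    return (
        FIELD_WEIGHTS["title"] * len(q & tokens(doc.title))
        + FIELD_WEIGHTS["category"] * len(q & tokens(doc.category))
        + FIELD_WEIGHTS["tags"] * len(q & {tag.lower() for tag in doc.tags})
    )


docs = [
    Doc("Office Seating Plans", "Facilities", {"office"}),
    Doc("How Distributed Groups Collaborate", "Remote Work", {"remote", "teams", "practices"}),
]
query = "best practices for remote teams"
ranked = sorted(docs, key=lambda d: score(query, d), reverse=True)
print([d.title for d in ranked])
```

In this toy data the second document ranks first even though its title shares no words with the query, because its category and tags carry the match.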

Real-World Applications

Many organizations already apply query understanding in their content hub experiences:

- E-learning platforms: Use live search with semantic understanding to connect students with the right learning materials, even based on vague or partially written topics.

- Knowledge bases: Companies use live search to guide employees or customers to FAQs, troubleshooting guides, or policy documents quickly and efficiently.

- Healthcare repositories: Help users understand symptoms or find relevant treatment guidelines using highly specialized medical vocabulary.

In all these cases, live search fueled by deep query understanding brings relevance, accuracy, and speed to the forefront.

Future of Query Understanding

With advancements in AI and machine learning, the future of live search is promising. We can expect:

- Context-aware assistants: Systems that understand the broader context of the user’s journey — across websites or sessions — to offer hyper-relevant results.

- Voice-integrated query parsing: As voice search becomes more prevalent, NLP models will need to handle conversational queries with accuracy.

- Emotionally aware search: AI might one day detect the emotional tone of a query and tailor content delivery to match it appropriately.

Conclusion

Live search on content hubs represents a shift toward a more dynamic, intelligent, and responsive user experience. At the core of this transformation lies the science and art of query understanding. From semantic models to autocomplete suggestions and user interaction feedback loops, every component works together to unravel user intent.

By focusing on improving query comprehension, content creators, developers, and UX designers can ensure that the wealth of information in content hubs is not only accessible but also meaningful — helping users to find the right knowledge at the right time.

In a world overflowing with content, empowering users with smarter search is not a luxury — it’s a necessity.