Artificial intelligence systems are often evaluated on measurable indicators such as accuracy, precision, recall, and inference speed. Yet behind these headline numbers lies a less visible phenomenon that can quietly distort model behavior: peephole AI noise. The term is not a standard label in the academic literature; it is used informally in technical discussions to describe subtle, localized disturbances in model perception that alter decision pathways without triggering obvious system failures. Understanding this concept is critical for building and deploying reliable AI systems in high-stakes environments.

TL;DR: Peephole AI noise refers to small, localized distortions in input data or internal model representations that disproportionately influence predictions. It often emerges from limited receptive fields, biased sampling, or feature over-amplification inside neural networks. These distortions can degrade model accuracy, increase variance, and reduce reliability in real-world conditions. Identifying and mitigating peephole noise improves generalization and operational stability.

What Is Peephole AI Noise?

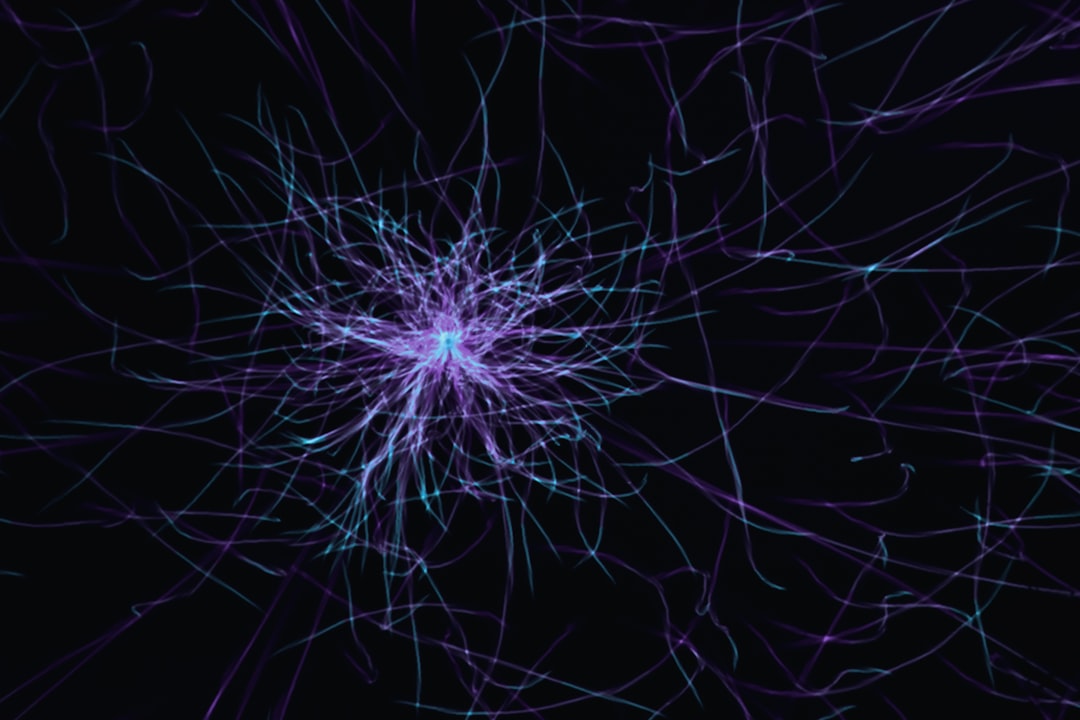

Peephole AI noise can be defined as a form of localized informational distortion that occurs when a model relies too heavily on narrow or incomplete “views” of input data. Instead of processing the broader contextual landscape, the model effectively looks through a “peephole” — focusing on limited feature regions that may not represent the true signal.

This phenomenon often manifests in:

- Convolutional neural networks (CNNs) with constrained receptive fields

- Recurrent neural networks (RNNs) and LSTMs with improperly calibrated peephole connections

- Transformer-based systems when attention collapses toward narrow token clusters

Unlike traditional random noise, which is stochastic and often addressable via smoothing or averaging, peephole AI noise is structural. It stems from how the model processes information rather than from random corruption of data.
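The difference between random and structural noise can be illustrated with a toy numerical analogy (not a neural network): averaging many readings cancels zero-mean noise, but a constant offset, standing in here for a structural bias, survives any amount of averaging. The helper `average_reading` and all values are hypothetical.

```python
import random

def average_reading(true_value, n, noise_std, offset, seed=0):
    """Average n readings of true_value corrupted by zero-mean Gaussian noise
    plus a constant (structural) offset. Purely illustrative."""
    rng = random.Random(seed)
    readings = [true_value + offset + rng.gauss(0.0, noise_std) for _ in range(n)]
    return sum(readings) / n

true_value = 1.0
random_only = average_reading(true_value, 10_000, noise_std=1.0, offset=0.0)
structural = average_reading(true_value, 10_000, noise_std=1.0, offset=0.5)

# Random noise averages away; the structural offset does not.
print("error with random noise only:", abs(random_only - true_value))
print("error with structural offset:", abs(structural - true_value))
```

Increasing `n` shrinks the first error further, while the second stays near the 0.5 offset, which is why smoothing alone cannot remove structural noise.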

Technical Explanation: How Peephole Noise Emerges

To understand the mechanics, consider how modern machine learning models extract features. Neural systems operate through hierarchical abstraction:

- Lower layers detect primitive patterns (edges, tokens, signals).

- Intermediate layers combine these into representations.

- Higher layers perform classification or prediction.

Peephole AI noise emerges when one of these hierarchical levels disproportionately amplifies a narrow feature subset while suppressing broader contextual signals.

1. Receptive Field Constraints

In convolutional models, the receptive field determines how much of the input contributes to a given activation. If receptive fields are too small relative to the problem domain, the model may optimize for micro-patterns while ignoring global structure.

Mathematically, suppose the model computes

$$f(x) = g\big(h_{\text{local}}(x)\big)$$

where $h_{\text{local}}(x)$ extracts localized features and $g$ aggregates them. Overreliance on $h_{\text{local}}$ introduces bias because long-range dependencies are underrepresented: the model fits partial structure instead of the full signal distribution.
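To make "too small relative to the problem domain" concrete, the receptive field of a stack of convolution and pooling layers can be computed layer by layer: each layer with kernel size k widens the field by (k - 1) times the cumulative stride of the layers before it. The sketch below assumes square kernels and no dilation, and uses a hypothetical `receptive_field` helper that takes `(kernel_size, stride)` pairs.

```python
def receptive_field(layers):
    """Receptive field (in input pixels, along one axis) of the last layer.

    layers: list of (kernel_size, stride) tuples, ordered from input to output.
    Assumes no dilation; padding does not affect the receptive field size.
    """
    rf = 1    # before any layer, a unit "sees" exactly one input pixel
    jump = 1  # input-pixel distance between adjacent units at the current depth
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf

# Three 3x3 convolutions with stride 1.
print(receptive_field([(3, 1), (3, 1), (3, 1)]))  # 7
# Same convolutions, with a 2x2 stride-2 pooling layer after the first one.
print(receptive_field([(3, 1), (2, 2), (3, 1), (3, 1)]))  # 12
```

If the structures the task depends on span far more pixels than the final receptive field, the network can only learn them indirectly, which is the setting in which local micro-patterns dominate.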

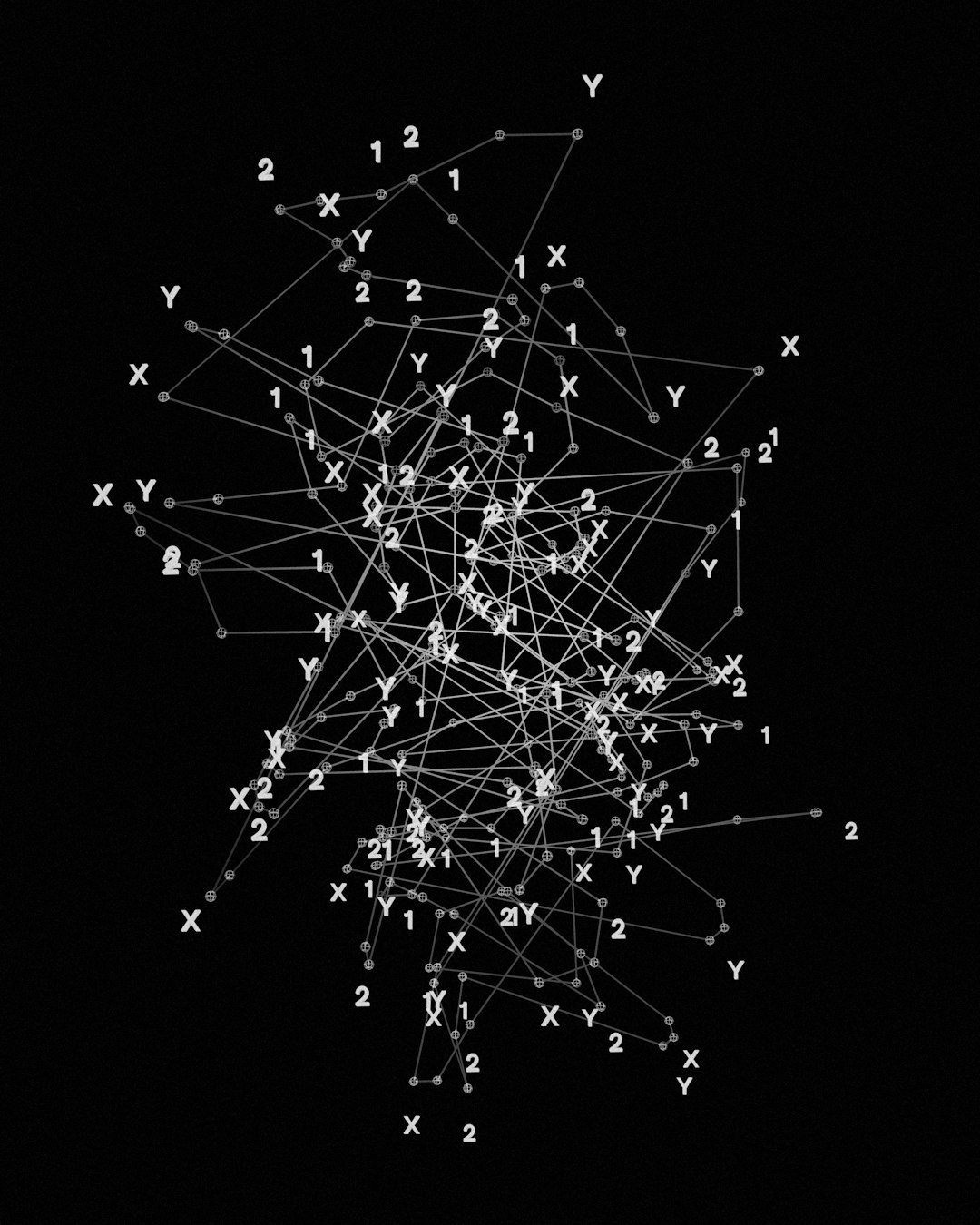

2. Attention Collapse in Transformer Architectures

In transformer-based systems, self-attention mechanisms assign weights to tokens:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d}}\right)V$$

If the softmax distribution becomes narrowly peaked, attention mass concentrates around a small subset of tokens. This “attention collapse” acts like a peephole, filtering out alternative contextual cues. The resulting output may be confident but fragile.
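Attention collapse can be measured directly. A uniform attention row over n tokens has Shannon entropy ln(n), while a row that puts all its mass on one token has entropy 0, so low row entropy is a simple collapse indicator. The sketch below computes scaled dot-product weights for a single query in plain Python; the keys and queries are made-up values, and scaling the query up (same direction, larger norm) stands in for whatever drives attention scores apart in a trained model.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Shannon entropy in nats; 0 means all mass sits on a single token."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def attention_weights(query, keys):
    """Weights softmax(q . k / sqrt(d)) of one query over all keys."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    return softmax(scores)

keys = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7], [-1.0, 0.0]]
for scale in (1.0, 10.0):
    w = attention_weights([scale * 1.0, scale * 0.2], keys)
    print(f"scale={scale}: weights={[round(x, 3) for x in w]}, "
          f"entropy={entropy(w):.3f} (max {math.log(len(keys)):.3f})")
```

The larger query concentrates most of its mass on one or two keys and its entropy drops well below the maximum. Where to set an alarm threshold is a judgment call that depends on sequence length and task.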

3. Overfitting to Salient Artifacts

Peephole noise can also arise from training data that contains subtle but persistent artifacts. The model may latch onto watermark fragments, background color gradients, or token position biases. These artifacts are not semantically meaningful, yet they become predictive shortcuts.
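The shortcut effect is easy to reproduce with a toy example. In the hand-made data below (hypothetical, for illustration only), an "artifact" feature such as a watermark matches the label perfectly during training, while the genuinely informative "semantic" feature is right only 75% of the time. A learner that picks the single most predictive feature (a decision stump) chooses the artifact, and its accuracy falls to chance once the artifact stops tracking the label.

```python
# Each example: (semantic_feature, artifact_feature, label). Hypothetical data.
train = [
    (1, 1, 1), (1, 1, 1), (1, 1, 1), (0, 1, 1),  # artifact present for every label-1 example
    (0, 0, 0), (0, 0, 0), (0, 0, 0), (1, 0, 0),  # ...and absent for every label-0 example
]
# At deployment the artifact no longer tracks the label.
deploy = [
    (1, 0, 1), (1, 1, 1), (1, 0, 1), (0, 1, 1),
    (0, 1, 0), (0, 0, 0), (0, 1, 0), (1, 0, 0),
]

def accuracy(data, feature_index):
    """Accuracy of the rule 'predict label = value of this feature'."""
    return sum(row[feature_index] == row[2] for row in data) / len(data)

def fit_stump(data):
    """Pick the single feature that best predicts the label on `data`."""
    return max(range(2), key=lambda i: accuracy(data, i))

chosen = fit_stump(train)
print("chosen feature:", ["semantic", "artifact"][chosen])  # artifact
print("train accuracy:", accuracy(train, chosen))            # 1.0
print("deploy accuracy:", accuracy(deploy, chosen))          # 0.5
print("semantic feature at deploy:", accuracy(deploy, 0))    # 0.75
```

The semantic feature would have kept its 75% accuracy at deployment; the shortcut the learner preferred drops to 50%.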

Three Primary Causes of Peephole AI Noise

While many factors contribute to localized model distortion, three causes are particularly common in production systems.

1. Biased or Narrow Training Data

When training data lacks variability, the model learns to associate outcomes with narrow contextual cues. For example:

- Medical imaging datasets from a single device manufacturer

- Speech recognition data from limited dialect groups

- Fraud detection trained on outdated transaction patterns

The model effectively views the world through a constrained “peephole” created by sampling bias. During deployment, this narrow focus fails under distribution shift.

Technical impact: the effective hypothesis space shrinks, and the empirical risk minimized during training becomes a biased estimate of risk under real-world distributions.
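One way to check whether deployment data has drifted outside the narrow slice covered by training is to compare binned feature distributions. The sketch below uses the population stability index (PSI), a drift metric common in credit-risk monitoring: the sum over bins of (actual - expected) * ln(actual / expected). The helper name and bin proportions are made up for illustration, and any alert threshold would need to be chosen for the application.

```python
import math

def psi(expected, actual, eps=1e-6):
    """Population stability index between two binned distributions.

    expected, actual: per-bin proportions (each list should sum to 1).
    eps guards against log(0) when a bin is empty.
    """
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        total += (a - e) * math.log(a / e)
    return total

train_bins = [0.25, 0.25, 0.25, 0.25]    # feature histogram at training time
same_bins = [0.25, 0.25, 0.25, 0.25]     # deployment data that matches training
shifted_bins = [0.05, 0.15, 0.30, 0.50]  # deployment data that has drifted

print(psi(train_bins, same_bins))     # 0.0
print(psi(train_bins, shifted_bins))  # about 0.56
```

PSI is 0 for identical distributions and grows as they diverge; it flags that a shift happened but not whether the model depends on the shifted feature.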

2. Architectural Imbalance

Model design decisions can unintentionally encourage peephole behavior:

- Excessive pooling layers reducing spatial diversity

- Inadequate positional encoding scaling

- Overuse of dropout in early layers

Such configurations distort gradient signals during backpropagation. Gradients reinforce highly salient features while diminishing weaker but informative signals. The resulting optimization landscape favors local shortcuts over global coherence.

Technical impact: increased curvature of the loss surface around minima that depend on narrow features, leading to brittle convergence.

3. Loss Function Misalignment

A poorly chosen objective function can incentivize narrow correlations. For instance:

- Accuracy-only optimization ignoring calibration

- Cross-entropy without regularization under class imbalance

- Reward maximization in reinforcement learning with sparse feedback

The optimizer will exploit easily accessible predictive cues to reduce immediate loss. Over time, the model amplifies shortcut features — another form of peephole noise.
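One common remedy for class imbalance is to weight each example's cross-entropy term by the inverse frequency of its class. The sketch below is plain Python with hypothetical helper names; deep learning frameworks usually expose the same idea as a per-class weight option on their cross-entropy loss. With 9 majority and 1 minority example, a model that always assigns 0.9 to the majority class looks good under the unweighted loss and clearly worse under the weighted one.

```python
import math

def inverse_frequency_weights(labels, num_classes):
    """Weight each class by N / (num_classes * count), so rare classes count more."""
    counts = [labels.count(c) for c in range(num_classes)]
    n = len(labels)
    return [n / (num_classes * cnt) if cnt else 0.0 for cnt in counts]

def cross_entropy(probs, labels, weights=None):
    """Mean (optionally class-weighted) negative log-likelihood.

    probs: per-example probability vectors; labels: integer class ids.
    The weighted version is normalized by the total weight.
    """
    total, norm = 0.0, 0.0
    for p, y in zip(probs, labels):
        w = 1.0 if weights is None else weights[y]
        total += -w * math.log(p[y])
        norm += w
    return total / norm

labels = [0] * 9 + [1]
probs = [[0.9, 0.1]] * 10  # always favors the majority class
weights = inverse_frequency_weights(labels, 2)
unweighted = cross_entropy(probs, labels)
weighted = cross_entropy(probs, labels, weights)
print("unweighted:", round(unweighted, 3), "weighted:", round(weighted, 3))
```

With these weights each class contributes the same total weight (5.0 here), so the optimizer can no longer drive the loss down by serving only the majority class.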

Impact on Model Accuracy and Reliability

Peephole AI noise does not always reduce training accuracy. In fact, it may temporarily improve it. The true damage appears under evaluation across broader conditions.

1. Reduced Generalization

Because the model depends on narrow signals, its performance drops sharply when those signals shift or disappear. This leads to:

- Higher generalization error

- Performance instability across domains

- Sensitivity to adversarial perturbations

The generalization gap, the difference between training and real-world performance, widens because the model has not learned invariant structure.

2. Increased Variance in Predictions

Peephole-focused systems often display high output variance for small input changes. A minor alteration outside the narrow focus region can drastically alter prediction confidence.

This destabilizes:

- Autonomous vehicle perception systems

- Financial risk scoring engines

- Medical diagnostic classifiers
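A simple way to quantify this instability is to add small perturbations to an input and measure the spread of the model's outputs. In the sketch below both models are hypothetical stand-ins: `peephole_model` puts a large weight on a single feature, while `broad_model` spreads the same total weight across all eight features. Under identical Gaussian noise the peephole model's output varies noticeably more (about sqrt(8) times as much while the sigmoid is near-linear), because noise on one feature is never averaged out.

```python
import math
import random
import statistics

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def peephole_model(x):
    # Hypothetical: all weight on a single input feature.
    return sigmoid(8.0 * x[0])

def broad_model(x):
    # Hypothetical: the same total weight spread evenly over every feature.
    return sigmoid(8.0 * sum(x) / len(x))

def prediction_std(model, x, noise=0.05, trials=500, seed=0):
    """Standard deviation of the model's output when every input feature
    receives small zero-mean Gaussian noise."""
    rng = random.Random(seed)
    outputs = []
    for _ in range(trials):
        noisy = [xi + rng.gauss(0.0, noise) for xi in x]
        outputs.append(model(noisy))
    return statistics.stdev(outputs)

x = [0.0] * 8
print("peephole:", prediction_std(peephole_model, x))
print("broad:   ", prediction_std(broad_model, x))
```

The noise scale and trial count are arbitrary choices; in practice the perturbations should reflect variation the model is expected to tolerate in deployment.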

3. Calibration Breakdown

Calibration refers to alignment between predicted probabilities and observed outcomes. Peephole noise distorts probability distributions because confidence becomes anchored to localized artifacts.

A model might output 0.92 probability for a prediction based on a single exaggerated feature, despite weak holistic evidence. This miscalibration erodes trust in AI systems.
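Calibration is commonly summarized by the expected calibration error (ECE): predictions are grouped into equal-width confidence bins, and ECE is the average gap between each bin's accuracy and its mean confidence, weighted by the fraction of predictions in the bin. The sketch below is a plain-Python version with a hypothetical helper name; the example mirrors the 0.92 case above with made-up outcomes in which only 6 of 10 such predictions are correct.

```python
def expected_calibration_error(confidences, correct, num_bins=10):
    """ECE with equal-width bins over [0, 1]."""
    n = len(confidences)
    bins = [[] for _ in range(num_bins)]
    for conf, ok in zip(confidences, correct):
        # Map a confidence in [0, 1] to a bin; conf == 1.0 goes in the last bin.
        idx = min(int(conf * num_bins), num_bins - 1)
        bins[idx].append((conf, ok))
    ece = 0.0
    for b in bins:
        if not b:
            continue
        avg_conf = sum(c for c, _ in b) / len(b)
        acc = sum(1 for _, ok in b if ok) / len(b)
        ece += (len(b) / n) * abs(acc - avg_conf)
    return ece

# Hypothetical: ten predictions at 0.92 confidence, only six of them correct.
confs = [0.92] * 10
hits = [True] * 6 + [False] * 4
print(expected_calibration_error(confs, hits))  # approximately 0.32
```

A perfectly calibrated model would score 0; here the model claims 92% confidence while being right 60% of the time.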

Methods to Detect Peephole AI Noise

Professionals can identify this issue through systematic auditing:

- Saliency map analysis to reveal over-concentration of attention

- Feature ablation tests to measure dependency on narrow signals

- Cross-domain validation to detect hidden bias

- Adversarial stress testing to evaluate robustness

Visualization tools often reveal suspicious clustering of attention around irrelevant regions, confirming localized overemphasis.
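Feature ablation can be sketched as follows: replace one feature at a time with a neutral baseline and record how much accuracy drops. A single feature whose removal accounts for most of the drop is a sign of peephole dependence. The sketch assumes a model callable that maps a list of feature values to a class label and uses 0.0 as the baseline; both are assumptions, and for images one would more likely ablate patches or regions than individual features. The toy model and data are hypothetical.

```python
def ablation_scores(model, inputs, labels, baseline=0.0):
    """For each feature index, set that feature to `baseline` in every input
    and return the resulting drop in accuracy."""
    def accuracy(rows):
        return sum(model(x) == y for x, y in zip(rows, labels)) / len(labels)

    base_acc = accuracy(inputs)
    drops = []
    for j in range(len(inputs[0])):
        ablated = [x[:j] + [baseline] + x[j + 1:] for x in inputs]
        drops.append(base_acc - accuracy(ablated))
    return drops

# Hypothetical model that secretly looks only at feature 2.
model = lambda x: int(x[2] > 0.5)
inputs = [[0.3, 0.8, 1.0], [0.6, 0.1, 1.0], [0.4, 0.9, 0.0], [0.7, 0.2, 0.0]]
labels = [1, 1, 0, 0]
print(ablation_scores(model, inputs, labels))  # [0.0, 0.0, 0.5]
```

All of the accuracy loss comes from feature 2, exposing the narrow dependency even though the model scores perfectly on the unablated data.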

Mitigation Strategies

Reducing peephole AI noise requires both data-centric and model-centric approaches.

Data Improvements

- Expand dataset diversity across environments

- Introduce counterfactual examples

- Actively remove spurious artifacts

Architectural Adjustments

- Increase receptive field size or use multi-scale feature extraction

- Incorporate residual connections for context preservation

- Regularize attention distributions
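One way to regularize attention distributions is to penalize low attention entropy. A hypothetical formulation, where $\alpha_i$ is the $i$-th of $m$ attention rows over $n$ tokens, $\mathcal{L}_{\text{task}}$ is the original training loss, and $\lambda \ge 0$ is a strength you would need to tune:

$$\mathcal{L} = \mathcal{L}_{\text{task}} + \lambda \cdot \frac{1}{m}\sum_{i=1}^{m}\Big(\ln n - H(\alpha_i)\Big), \qquad H(\alpha_i) = -\sum_{j=1}^{n} \alpha_{ij} \ln \alpha_{ij}$$

The penalty is 0 when every row is uniform and reaches $\lambda \ln n$ when every row collapses onto a single token. A small $\lambda$ discourages collapse without forcing attention to be uniform, which would discard useful focus.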

Objective Function Refinement

- Add calibration-aware losses

- Apply robust optimization techniques

- Use distributionally robust training methods

These measures help ensure the model learns structurally meaningful patterns rather than shortcut features.
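Distributionally robust training replaces the average loss with one that guards against the worst case. The simplest version, sketched below with hypothetical helper names and made-up per-example losses, groups examples by a known attribute (site, device, dialect) and optimizes the worst group's average loss. This hard-max form is illustrative only; practical group-robust methods usually adjust group weights smoothly during training instead.

```python
def group_losses(losses, groups):
    """Average loss per group label."""
    sums, counts = {}, {}
    for loss, g in zip(losses, groups):
        sums[g] = sums.get(g, 0.0) + loss
        counts[g] = counts.get(g, 0) + 1
    return {g: sums[g] / counts[g] for g in sums}

def worst_group_loss(losses, groups):
    """Robust objective: the largest per-group average loss."""
    return max(group_losses(losses, groups).values())

losses = [0.1, 0.2, 0.1, 0.9, 1.1]
groups = ["site_a", "site_a", "site_a", "site_b", "site_b"]
print("average loss:", sum(losses) / len(losses))             # about 0.48, hides site_b
print("worst-group loss:", worst_group_loss(losses, groups))  # about 1.0, from site_b
```

Minimizing the worst-group value forces the model to improve on `site_b`, where a shortcut that works for the majority group fails.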

Why This Matters in High-Stakes AI

In low-risk consumer applications, minor accuracy fluctuations may be tolerable. In healthcare, finance, autonomous systems, and national infrastructure, they are not.

Peephole AI noise creates a dangerous illusion of reliability: models appear highly accurate during validation yet fail unpredictably in deployment. The problem is subtle, often invisible during routine evaluation, and deeply embedded within architecture and data interactions.

As AI systems scale in scope and influence, addressing structural noise becomes not just a performance optimization issue but a governance and safety imperative.

Conclusion

Peephole AI noise is a structural distortion phenomenon in which models overfocus on localized signals, suppressing broader contextual understanding. It frequently arises from biased data, architectural imbalance, and loss function misalignment. While it may not always degrade training performance, it undermines generalization, calibration, and reliability in real-world conditions.

Serious AI development requires deliberate efforts to detect and mitigate this form of noise. Through diversified datasets, balanced architectures, robust objective design, and rigorous validation practices, organizations can build systems that perceive not through a peephole, but through a wide and reliable lens.